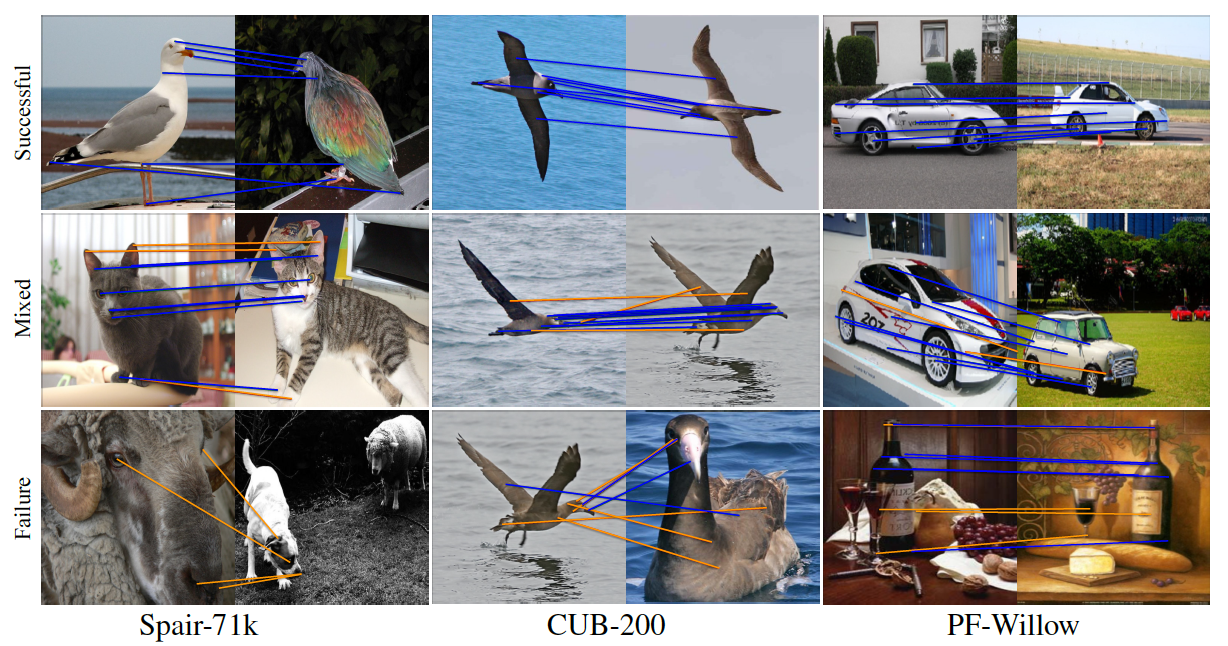

Example succesful, mixed and failure cases are shown across the 3 datasets. Interestingly it can be seen that even the failure cases tend to map to reasonable regions of the image.

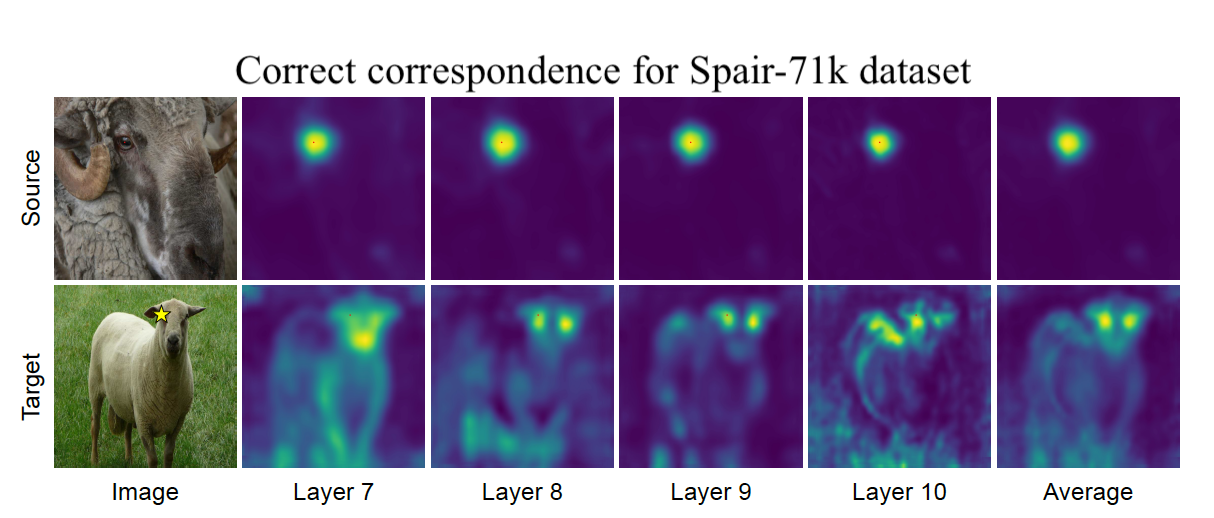

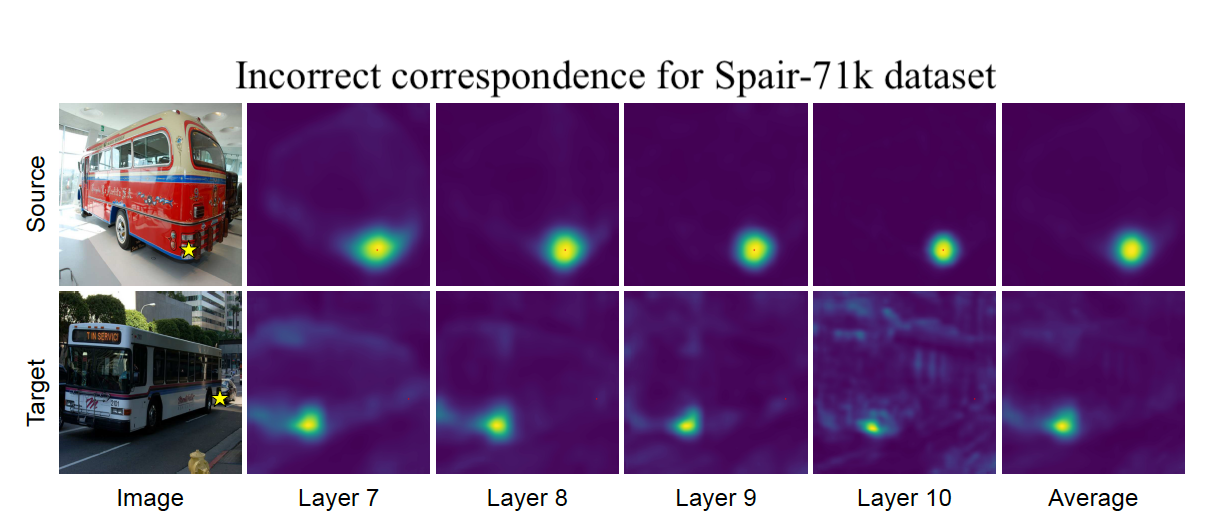

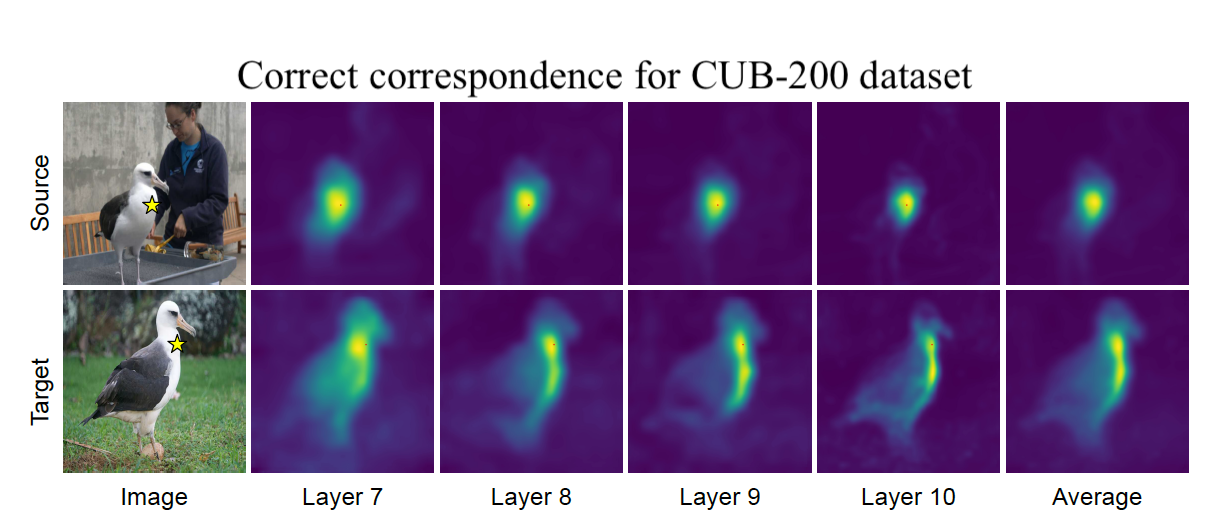

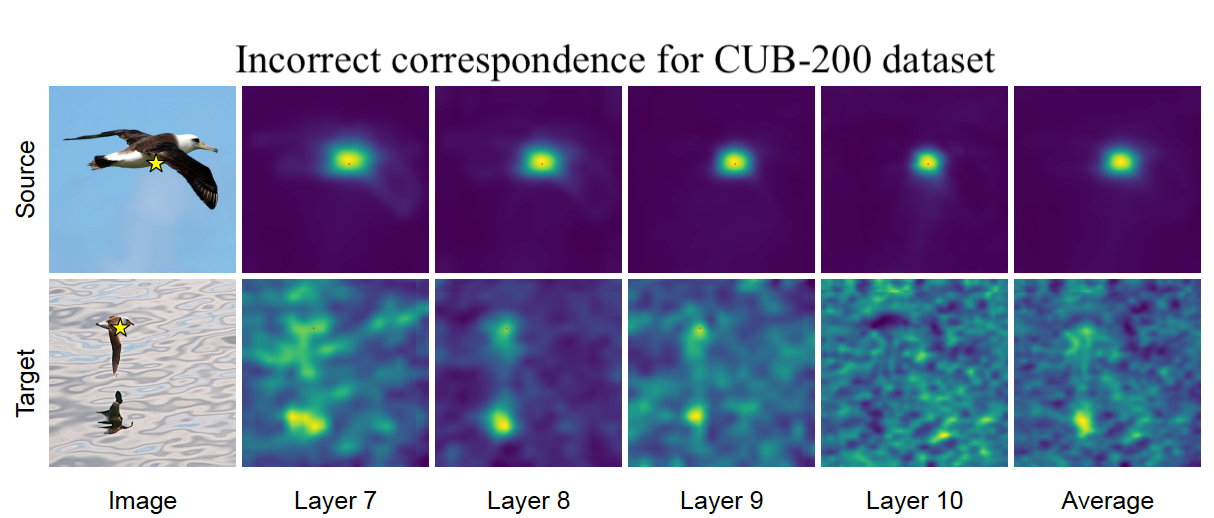

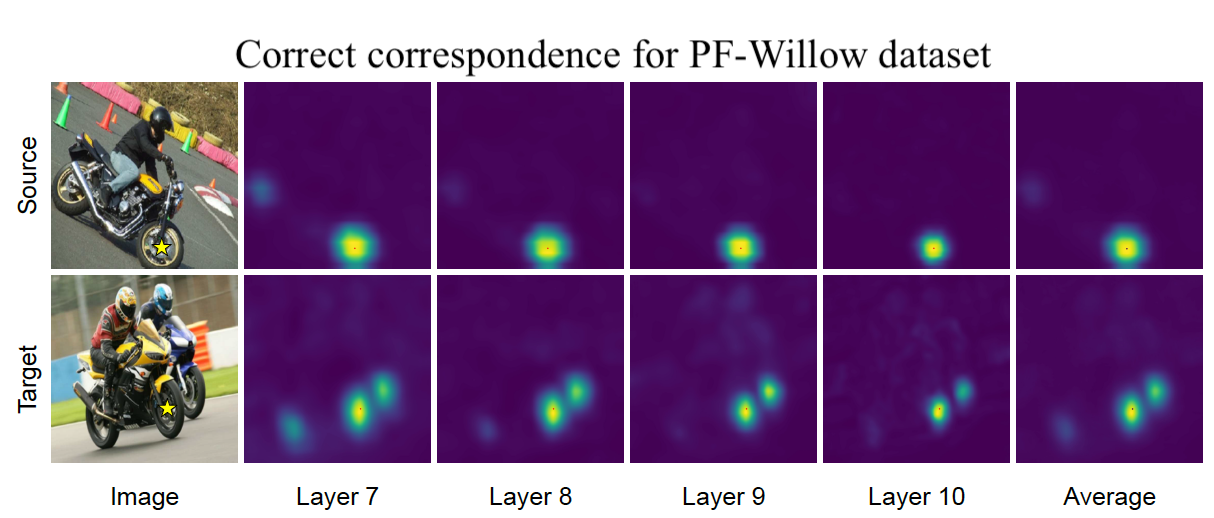

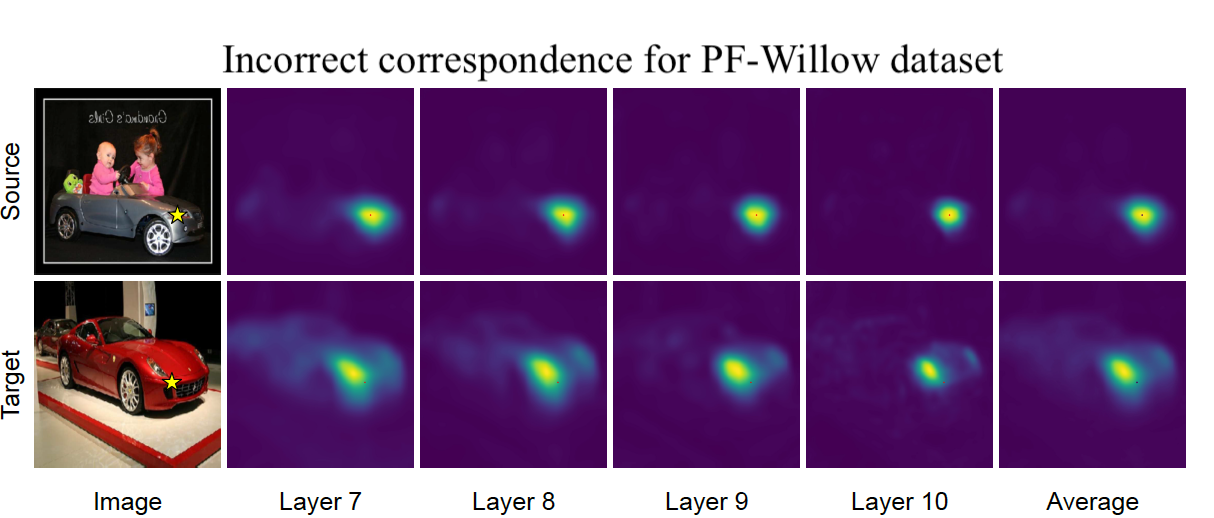

Attention maps for both correct and incorrect correspondences across Spair-71k, PF-Willow and CUB-200 are visualized for the layers indicated. Source and target locations are displayed as yellow stars on the source and target images respectively.

Concurrent Work

There's a lot of excellent work that was introduced around the same time as ours.

Diffusion Hyperfeatures consolidates multi-scale and multi-timestep feature maps from Stable Diffusion into per-pixel feature descriptors with a lightweight aggregation network.

A Tale of Two Features introduces a fusion approach that capitalizes on the distinct properties of Stable Diffusion (SD) features and DINOv2 by extracting per pixel features from each.

Emergent Correspondence from Image Diffusion extracts per pixel features from Stable Diffusion.