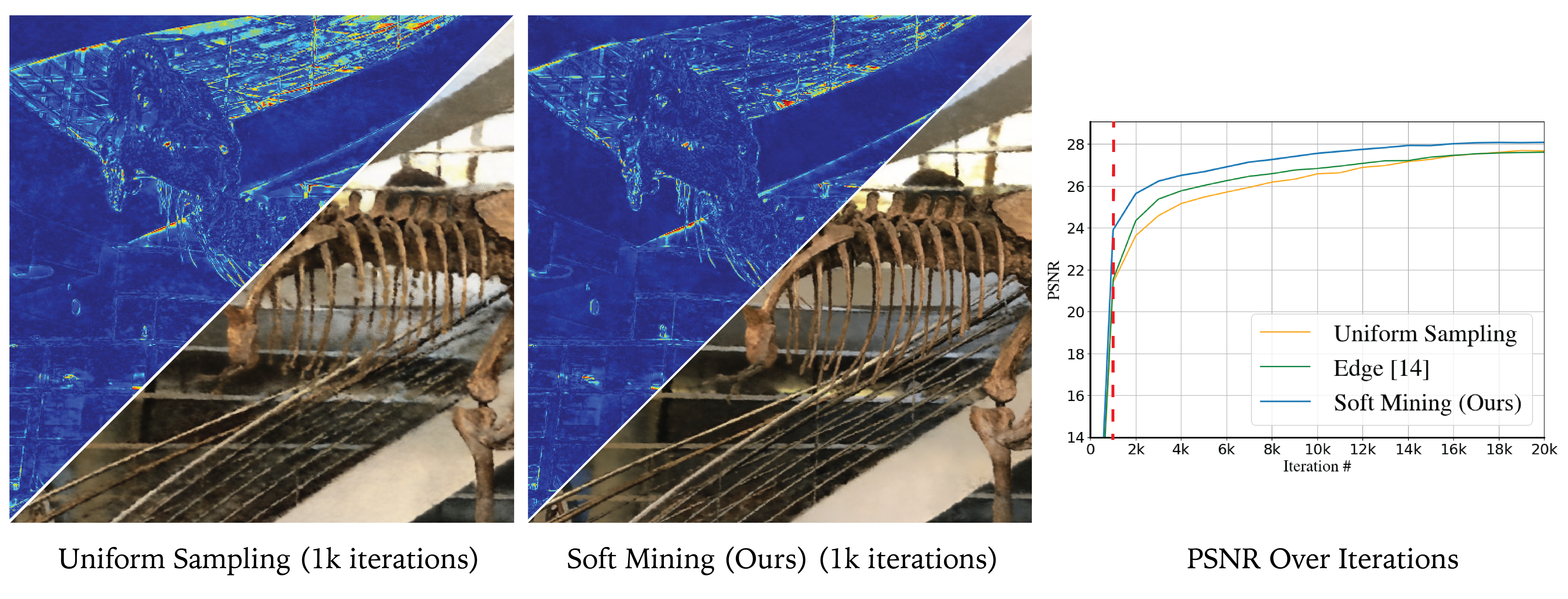

We present an approach to accelerate Neural Field training by efficiently selecting sampling locations. While Neural Fields have recently become popular, they are often trained by sampling the training domain uniformly or through handcrafted heuristics. We show that improved convergence and better final training quality can be achieved with a soft mining technique based on importance sampling: rather than either considering or ignoring a pixel completely, we weigh the corresponding loss by a scalar. To implement our idea we use Langevin Monte-Carlo sampling. We show that, by doing so, regions with higher error are selected more frequently, leading to more than a 2x improvement in convergence speed.
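The two ingredients named above, sampling locations in proportion to the error and weighting each sample's loss by a scalar, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes a hypothetical analytic error field on the unit square (`error_field`, with made-up constants `CENTER`, `SIGMA`, `FLOOR`) so that the gradient of its log is available in closed form; in a real Neural Field the error would come from the network. The sampler is the textbook unadjusted Langevin update x ← x + (ε/2)∇log q(x) + √ε ξ, with reflection at the domain boundary as an assumed design choice, and the weight is the standard importance-sampling ratio p(x)/q(x) for a uniform p.

```python
import numpy as np

# Hypothetical toy error field on [0, 1]^2: a small floor plus one Gaussian
# "hard region". A real Neural Field would use its current per-pixel loss.
CENTER = np.array([0.7, 0.3])
SIGMA = 0.08
FLOOR = 0.05


def error_field(x):
    """Unnormalized target density, proportional to the (toy) error."""
    d2 = np.sum((x - CENTER) ** 2, axis=-1)
    return FLOOR + np.exp(-d2 / (2 * SIGMA**2))


def grad_log_error(x):
    """Closed-form gradient of log(error_field) for the toy field above."""
    d2 = np.sum((x - CENTER) ** 2, axis=-1, keepdims=True)
    bump = np.exp(-d2 / (2 * SIGMA**2))
    grad = -bump * (x - CENTER) / SIGMA**2
    return grad / (FLOOR + bump)


def reflect(x):
    """Fold points that stepped slightly outside [0, 1] back inside."""
    x = np.abs(x)
    return 1.0 - np.abs(1.0 - x)


def langevin_samples(n, steps, step_size, rng):
    """Unadjusted Langevin dynamics targeting q(x) proportional to error_field."""
    x = rng.uniform(0.0, 1.0, size=(n, 2))  # start from uniform sampling
    for _ in range(steps):
        noise = rng.standard_normal(x.shape)
        x = x + 0.5 * step_size * grad_log_error(x) + np.sqrt(step_size) * noise
        x = reflect(x)
    return x


def normalizer(res=512):
    """Midpoint-rule estimate of Z, the integral of error_field over [0, 1]^2."""
    t = (np.arange(res) + 0.5) / res
    grid = np.stack(np.meshgrid(t, t, indexing="ij"), axis=-1)
    return error_field(grid).mean()


def importance_weighted_loss(per_sample_loss, x, z):
    """Scale each loss by p(x)/q(x); p is uniform on the unit square, so p = 1."""
    q = error_field(x) / z
    return np.mean(per_sample_loss / q)


rng = np.random.default_rng(0)
samples = langevin_samples(n=4096, steps=2000, step_size=1e-3, rng=rng)
z = normalizer()
near_bump = np.linalg.norm(samples - CENTER, axis=-1) < 2 * SIGMA
print(f"fraction of samples near the high-error region: {near_bump.mean():.2f}")
print(f"uniform sampling would give about {np.pi * (2 * SIGMA) ** 2:.2f}")
```

Because every sample keeps a nonzero weight, no location is ever fully ignored; high-error regions are simply visited more often and down-weighted accordingly, which keeps the weighted loss an unbiased estimate of the uniform-sampling loss.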

NeRF Example - Synthetic Dataset. The evolution of test PSNR during training with our Soft Mining Sampling technique, compared to the Uniform Sampling baseline, is illustrated on the 'Mic', 'Lego', and 'Chair' scenes from the NeRF Synthetic dataset. The orange curve shows the test PSNR of the uniform sampling baseline, while the blue curve shows the test PSNR of our method. The yellow arrow marks the current position on the curve.

NeRF Example - LLFF Dataset. The evolution of test PSNR during training with our Soft Mining Sampling technique, compared to the Uniform Sampling baseline, is illustrated on the 'Trex', 'Fortress', and 'Fern' scenes from the LLFF dataset. The orange curve shows the test PSNR of the uniform sampling baseline, while the blue curve shows the test PSNR of our method. The yellow arrow marks the current position on the curve.

2D Image Fitting Example. The evolution of test PSNR during training with our Soft Mining Sampling technique, compared to the Uniform Sampling baseline, is illustrated on the 'Pluto' and 'Tokyo' images for the 2D image fitting application, with a batch size of 512. The orange curve shows the test PSNR of the uniform sampling baseline, while the blue curve shows the test PSNR of our method. The yellow arrow marks the current position on the curve. The samples and the error map are also shown.

@article{kheradsoftmining2023,
  author = {Shakiba Kheradmand and Daniel Rebain and Gopal Sharma and Hossam Isack and Abhishek Kar and Andrea Tagliasacchi and Kwang Moo Yi},
  title = {Accelerating Neural Field Training via Soft Mining},
  journal = {arXiv},
  year = {2023},
}